The specter of disinformation looms over Canadian democracy, posing a greater threat than conventional forms of foreign interference, according to the findings of Justice Marie-Josée Hogue’s inquiry into foreign meddling. This alarming conclusion stems from a confluence of factors, including the rise of generative AI, the decline of traditional media, and persistent attempts by foreign actors to undermine Canada’s democratic processes. Although the inquiry examined a range of interference tactics, from covert financial contributions to the intimidation of diaspora communities, the prominence the final report gives to disinformation surprised some observers, since relatively little public testimony addressed the subject. Hogue’s report, however, emphasizes that the danger lies not merely in swaying election outcomes but in eroding the very foundations of democratic discourse: fostering distrust and division and hindering compromise. The report deems this insidious erosion of public trust an “existential threat” to Canadian democracy.

The report cites specific instances of alleged disinformation campaigns, including those targeting former Conservative MP Kenny Chiu, Erin O’Toole’s 2021 campaign, and Prime Minister Justin Trudeau. No state has been definitively linked to these campaigns, although China and India have both been suspected of involvement. Despite these examples, evidence presented to the commission suggested that disinformation had minimal impact on the 2019 and 2021 elections. Research by the Media Ecosystem Observatory (MEO) found that although a significant amount of misinformation circulated, particularly about COVID-19 and voter fraud, it gained little traction: engagement was low, and Canadians were generally able to recognize false narratives. This relatively benign picture, however, is likely to change drastically in the next election because of significant shifts in the social media landscape and the rise of AI-powered disinformation.

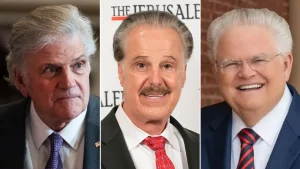

The control of major social media platforms such as X, Facebook, and Instagram by Elon Musk and Mark Zuckerberg, figures with divergent views on content moderation, introduces a new level of uncertainty. Coupled with the rise of generative AI, this creates fertile ground for large-scale disinformation campaigns and leaves the current communications infrastructure significantly more vulnerable. Because AI-generated disinformation is cheap and easy to produce and can reach vast audiences, it poses a serious challenge to democratic processes worldwide, where state and non-state actors are already attempting to manipulate public opinion. Hogue’s report underscores the scale of the problem, acknowledging that Canada will struggle to address it single-handedly.

The report’s recommendations include establishing a government body to monitor open-source information, such as social media activity, in order to identify and mitigate disinformation. This proposal, however, raises concerns about privacy and about the government’s ability to judge the veracity of information. The commission also emphasizes the role of traditional media, with their established journalistic standards, in providing accurate information to the public. Yet this emphasis comes as Canadians’ trust in traditional media declines and as a future Conservative government could defund the public broadcaster. Together, these factors paint a concerning picture for the future of informed democratic discourse in Canada.

The changing landscape of information dissemination, driven by the dominance of social media platforms and the emergence of generative AI, presents both challenges and opportunities. Because malicious actors can so easily leverage these technologies to spread disinformation at scale, countering these threats will require a concerted effort from governments and civil society. The Hogue commission report offers a starting point for this crucial conversation, but translating its recommendations into effective policies and infrastructure will require careful attention to the complexities involved. Balancing the need to combat disinformation against fundamental rights such as privacy and freedom of expression will be a delicate task.

Addressing the disinformation threat is urgent. As the next federal election approaches, the prospect of public opinion being manipulated through AI-generated content and coordinated disinformation campaigns is a real and present danger. Building resilience against these threats requires not only government action but also a commitment from individuals to evaluate information critically and engage responsibly with online content. The future of Canadian democracy hinges on Canadians’ collective ability to navigate this rapidly evolving information environment and to keep public discourse grounded in facts and reasoned debate.