The House Select Subcommittee on the Weaponization of the Federal Government has issued a stark warning that the federal government could use artificial intelligence (AI) for mass surveillance and censorship, stifling dissent and infringing on First Amendment rights. The subcommittee’s report, obtained exclusively by The Post, highlights the government’s significant investment in AI-powered tools capable of monitoring and censoring content and raises concerns about the erosion of free speech. The report draws parallels to cases abroad in which AI was deployed to suppress dissenting voices, particularly during the COVID-19 pandemic. It cites examples from the UK and Canada, including the trucker protests in Ottawa, to show how AI can be used to monitor and control public discourse on controversial issues. The subcommittee warns that similar tactics could be employed in the US, posing a serious threat to civil liberties.

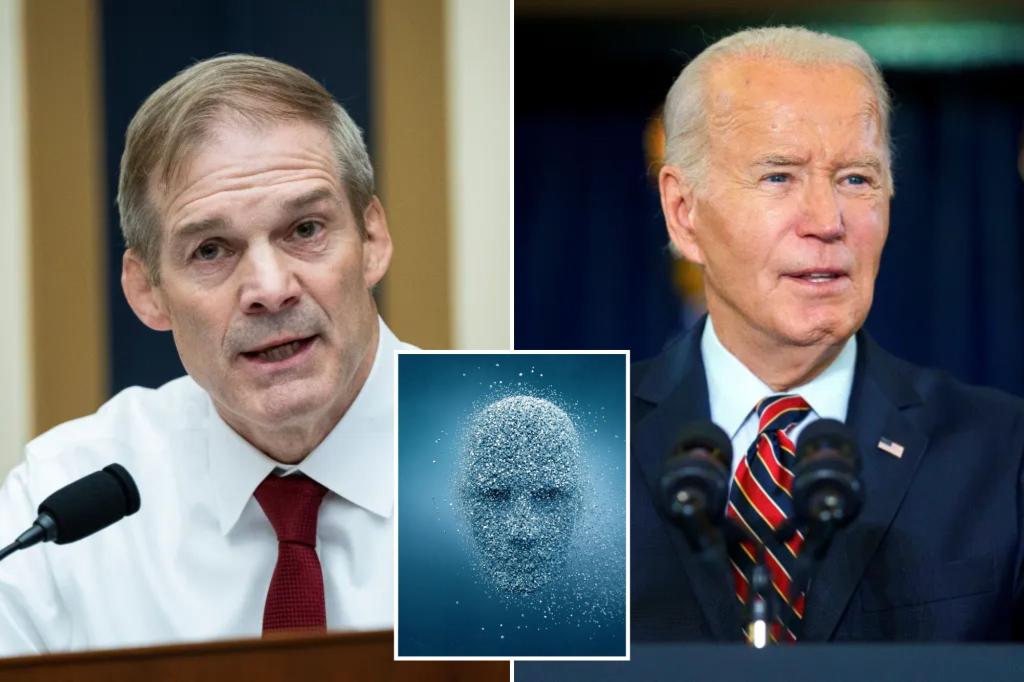

The report also scrutinizes the Biden administration’s emphasis on mitigating bias in AI, suggesting that these efforts may have unintended consequences. It presents the controversy over Google’s Gemini AI chatbot, which produced historically inaccurate images, such as depictions of George Washington and a pope as non-white, and at times refused requests for images of white people, as a possible example of how government pressure to address bias can skew outcomes. The subcommittee argues that the White House’s engagement with Google and other companies on “responsible AI” may have influenced the development of these models and contributed to perceived biases. This, the report contends, could give the government a pathway to indirectly regulate and censor disfavored viewpoints under the guise of promoting fairness and equity.

The subcommittee is also concerned that the Biden administration’s executive order requiring developers of the most powerful AI models to report safety test results and other information to the government, nominally for oversight purposes, could give the government undue influence over the AI market. While acknowledging the potential for AI abuse and the need for safeguards, the report warns that excessive government intervention could stifle innovation and allow government-preferred biases to become ingrained in AI models. That, in turn, could undermine Americans’ First Amendment rights by subtly shaping the information landscape and limiting exposure to diverse perspectives.

Beyond explicit regulation, the report points to government funding of AI research aimed at combating “misinformation” as another potential avenue for censorship. It cites grants from the National Science Foundation and the State Department’s Global Engagement Center for projects on vaccine skepticism and behavior-change campaigns as examples of how federal funding can promote specific narratives and potentially suppress dissenting viewpoints. The subcommittee argues that this kind of government involvement in AI development risks creating a system in which only officially sanctioned information is amplified, further restricting free speech and open dialogue.

The report also warns that “voluntary” agreements between the government and AI companies could become a mechanism for control. Citing agreements under which companies such as OpenAI and Anthropic give government agencies pre-release access to their AI models, the subcommittee argues that such arrangements could chill innovation and free expression. These partnerships, together with the creation of multi-agency task forces on AI safety and evaluation, raise concerns about government overreach and a blurring of the line between the public and private sectors in how AI is developed and deployed. The subcommittee suggests that these ostensibly voluntary collaborations could be subtly coercive, since companies may fear regulatory retaliation if they refuse government requests.

To address these concerns, the Weaponization Subcommittee advocates a hands-off approach, urging the government not to interfere in private companies’ development of AI algorithms and datasets. It also calls for ending government funding of AI research related to content moderation and for the US to stay out of global efforts to regulate lawful speech online. In addition, the subcommittee recommends rolling back federal regulatory authority over AI so that the government cannot exert undue influence over this rapidly evolving technology. Emphasizing the importance of protecting First Amendment rights in the digital age, the subcommittee warns against allowing the government to use AI as a tool for censorship and control. It champions its proposed Censorship Accountability Act, which would require federal agencies to disclose their communications with social media and AI companies about content moderation, as a critical step toward safeguarding free speech in the age of AI.