As the world navigates elections in the age of artificial intelligence (AI), Meta, the technology giant formerly known as Facebook, has reported that AI-generated misinformation had minimal influence on its platforms during elections. In response to concerns about the potential manipulation of election outcomes, Meta implemented defensive measures to detect and mitigate coordinated disinformation campaigns. Nick Clegg, Meta’s president of global affairs, emphasized that the company found little evidence of disinformation actors using generative AI effectively as a tool for deception. As part of a multi-faceted approach to monitoring content during critical electoral events in countries such as the United States, India, and Brazil, Meta established multiple operations centers to address content-related issues and take swift action on reported misconduct.

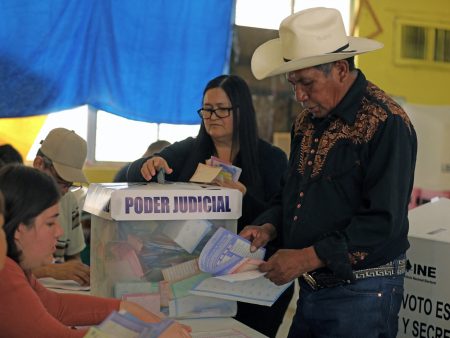

Disinformation remains a significant challenge. Meta has identified Russia, Iran, and China as the sources of most covert influence operations, with Russia the principal source since 2017, followed by Iran and China. Clegg reported that this year alone, Meta dismantled approximately 20 covert influence operations, in which AI-generated misinformation rarely appeared. The company's response included quick interventions to label and remove harmful content, reflecting a proactive stance during the largest election year in history, in which an estimated 2 billion people participated in elections globally. Despite initial apprehensions about the effects of AI on public perception during the election cycle, Clegg’s overall conclusion was that while AI-generated content was present, its impact was “modest and limited in scope.”

Amid the debate over AI in politics, public sentiment has leaned towards skepticism. A Pew Research survey conducted ahead of the 2024 elections indicated that the public expected predominantly negative consequences from AI, with many assuming AI technologies would do more harm than good. This apprehension spurred the Biden administration to launch a national security strategy emphasizing the importance of maintaining leadership in AI development while ensuring safety and accountability. Biden’s approach, with its focus on national security and responsible AI use, reflects a growing acknowledgment of the threats that rapid technological advances pose in the realm of misinformation.

At the same time, Meta’s reputation continues to face scrutiny as the company juggles accusations of censorship alongside its efforts to control misinformation on its platforms. Organizations such as Human Rights Watch have recently accused Meta of silencing pro-Palestine voices, linking this to a broader trend of social media censorship since the Hamas-Israel conflict escalated. Despite these criticisms, Meta aims to present itself as a facilitator of positive political discourse, directing users towards legitimate information sources about candidates and voting processes. By acting against misleading claims and potential threats of violence in election-related content, Meta strives to balance user freedom with community safety, although this sometimes leads to unintended consequences such as the mistaken removal of legitimate content.

On the topic of censorship, Republican lawmakers have expressed concerns about perceived bias against conservative viewpoints on social media. Prominent figures such as President-elect Donald Trump have been particularly vocal about their grievances over content moderation on platforms like Meta’s. In a communication to the House of Representatives, Mark Zuckerberg acknowledged some regret over specific content removals that followed pressure from the Biden administration. This acknowledgment reflects a tension between the platform’s efforts to regulate content and the expectations of its politically divided users, suggesting a need for clear guidelines and transparent content moderation practices that can stand up to political scrutiny.

As the conversation around technology and elections continues to develop, Clegg mentioned Zuckerberg’s ambition to engage with the incoming administration to help shape technology policy. This pursuit illustrates a strategic interest in staying relevant to discussions about the evolving landscape of digital communication, particularly AI’s role in shaping public opinion and discourse. It also points to the increasing need for collaboration between technology companies and governments to address the implications of AI in electoral politics. While the threat of misinformation remains, early assessments suggest that with appropriate legislative and corporate strategies, the potential for AI to disrupt electoral integrity may be curtailed, albeit not entirely eliminated.