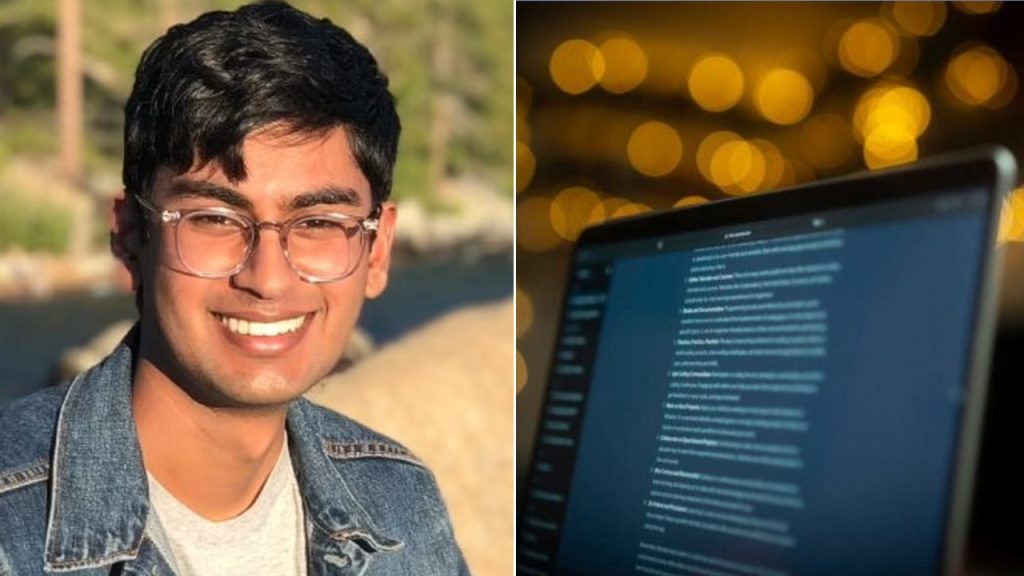

The Tragic Death of Suchir Balaji: An AI Researcher’s Growing Concerns and Untimely End

Suchir Balaji, a 26-year-old AI researcher formerly employed by OpenAI, was found dead in his San Francisco apartment on November 26, 2024. The death was officially ruled a suicide, and the San Francisco Police Department found no evidence of foul play. Balaji’s mother, Poornima Ramarao, is nonetheless pushing for a deeper investigation. She believes the circumstances of her son’s death are unusual and has commissioned a private autopsy, the results of which she describes as concerning. Ramarao is working with an attorney to press the police for a more thorough investigation, hoping to uncover any overlooked details.

Balaji’s death came roughly a month after he publicly accused OpenAI of copyright infringement in the development of its AI chatbot, ChatGPT. He had voiced his concerns in an interview with The New York Times, and his position echoed the claims in a lawsuit the newspaper had filed against OpenAI and Microsoft. The lawsuit alleged that the companies illegally used millions of copyrighted articles to train their AI models, creating a direct competitor to the news outlet. Balaji was identified in court documents as possessing crucial information relevant to the case, adding another layer of complexity to the unfolding tragedy.

Balaji’s journey into the world of AI began with a sense of optimism and a desire to contribute to technologies that could benefit humanity. He was drawn to OpenAI’s initial open-source philosophy, believing in the potential of AI to solve complex problems like disease and aging. However, his perspective shifted dramatically following the launch of ChatGPT and the company’s increasing focus on commercialization. He became disillusioned, expressing concerns that the technology he had helped develop was becoming more of a detriment than a benefit to society.

Balaji confided in his mother that AI’s negative repercussions were becoming apparent much sooner than he had anticipated. He expressed disappointment that instead of solving global challenges, chatbots were threatening the livelihoods of writers and creators whose work was used to train these systems. He viewed this as an unsustainable model for the internet ecosystem, raising ethical questions about the use of copyrighted material in AI development. His disillusionment grew to the point where he felt compelled to leave OpenAI, unwilling to further contribute to what he perceived as a harmful technology.

Balaji’s concerns extended specifically to the legal and ethical implications of using copyrighted material to train AI models. He challenged the assertion by OpenAI and Microsoft that their practices fell under "fair use," arguing that the AI-generated content directly competed with the original copyrighted material. He publicly voiced these concerns on social media, detailing his evolving understanding of copyright law and expressing his belief that fair use was an inadequate defense for many generative AI products. This public stance further solidified his position as a key figure in the ongoing legal battles surrounding AI and copyright.

Balaji’s death has cast a shadow over the rapidly evolving field of artificial intelligence, raising questions about the ethical implications of AI development and the potential consequences of unchecked technological advancement. His story highlights the internal struggles faced by those working at the forefront of this transformative technology, caught between the promise of innovation and the growing awareness of its potential downsides. The ongoing legal battles and the tragic circumstances of Balaji’s death underscore the need for a deeper societal conversation about the responsible development and deployment of AI, ensuring that its benefits are maximized while mitigating potential harms. His mother’s plea for a more thorough investigation serves as a poignant reminder of the human cost of technological progress and the importance of seeking truth and accountability.